This overview of sensitivity and specificity in medical testing explores the metrics used to evaluate diagnostic tests. Understanding these concepts is essential for accurate clinical decision-making, as high sensitivity and specificity are vital for reliable diagnosis and treatment. The overview covers the definitions, importance, and practical applications of these metrics and their roles in modern healthcare.

From the history of evaluating these metrics to the impact of disease prevalence, this overview will cover the formulas, implications of high and low values, and examples of tests with high sensitivity or specificity. We’ll examine how test design and disease severity affect these crucial measures. The discussion will also incorporate ROC curves and explore the limitations and future directions in medical testing.

Introduction to Medical Testing

Medical testing plays a crucial role in modern healthcare, aiding in the diagnosis, monitoring, and management of various diseases. These tests provide valuable information about a patient’s health status, allowing physicians to make informed decisions about treatment plans. A key aspect of evaluating medical tests is understanding their sensitivity and specificity. These metrics reflect the ability of a test to correctly identify those with and without a particular condition, and they are essential for determining the accuracy and reliability of diagnostic tools.

Understanding these metrics is vital for clinical decision-making. A test with high sensitivity correctly identifies most individuals with the condition, while high specificity ensures the test accurately identifies those without the condition. The interplay between these two factors directly influences the reliability of a diagnosis and the subsequent treatment strategies.

Definition of Sensitivity and Specificity

Sensitivity measures the proportion of individuals with a condition who test positive. A highly sensitive test correctly identifies most individuals with the condition. Specificity, conversely, measures the proportion of individuals without a condition who test negative. A highly specific test correctly identifies most individuals without the condition. These metrics are often expressed as percentages.

Importance in Clinical Decision-Making

Sensitivity and specificity are vital in clinical decision-making because they directly impact the accuracy and reliability of diagnostic results. For example, a test with high sensitivity is crucial for ruling out a condition when negative, while a test with high specificity is essential for confirming a condition when positive. The choice of a diagnostic test depends on the clinical context, the potential implications of a missed diagnosis, and the specific needs of the patient.

Relationship Between Sensitivity and Specificity

For a given test, the relationship between sensitivity and specificity is often inverse: moving the threshold for a positive result to improve one usually costs some of the other. For instance, increasing the sensitivity of a test might decrease its specificity, and vice versa. Clinicians must carefully consider this trade-off when selecting a diagnostic test for a particular patient. The ideal test would exhibit high values for both sensitivity and specificity, but this is often not attainable.

History of Evaluating Sensitivity and Specificity

The development of methods for evaluating sensitivity and specificity has evolved alongside the advancement of medical technology. Early methods relied on simple observation and clinical experience. As statistical methods improved, more sophisticated techniques emerged, allowing for more precise calculations and interpretations of test performance. The use of receiver operating characteristic (ROC) curves, for instance, provided a visual representation of the trade-off between sensitivity and specificity across different thresholds.

These developments led to a more standardized and evidence-based approach to evaluating diagnostic tests.

Comparison of Medical Tests

| Test Type | Sensitivity (%) | Specificity (%) | Description |
|---|---|---|---|
| Rapid HIV test | 95 | 99 | Rapid HIV tests are highly sensitive and specific, providing quick results for preliminary diagnosis. |
| Chest X-ray for pneumonia | 80 | 90 | Chest X-rays are useful for detecting pneumonia, but their sensitivity and specificity can vary depending on the severity and stage of the condition. |
| Complete Blood Count (CBC) for anemia | 90 | 95 | CBCs are used to diagnose anemia, providing valuable information about the blood components. |
| Pregnancy test | 99 | 98 | Pregnancy tests are highly sensitive and specific for confirming pregnancy. |

This table illustrates the sensitivity and specificity of various medical tests, highlighting their practical applications in different clinical scenarios. The figures presented are approximate and may vary depending on the specific test, the population being tested, and the presence of other underlying conditions.

Sensitivity

Sensitivity in medical testing is a crucial aspect of evaluating diagnostic tools. It quantifies the test’s ability to correctly identify individuals with a disease. A highly sensitive test is less likely to miss a case of the disease, while a less sensitive test might overlook some individuals who actually have the condition. Understanding sensitivity is vital for interpreting test results and making informed decisions in various medical contexts. Sensitivity, in essence, measures the proportion of individuals with the disease who test positive for it.

It’s a critical measure in disease screening and diagnosis, as a high sensitivity minimizes the risk of false negatives.

Definition and Formula

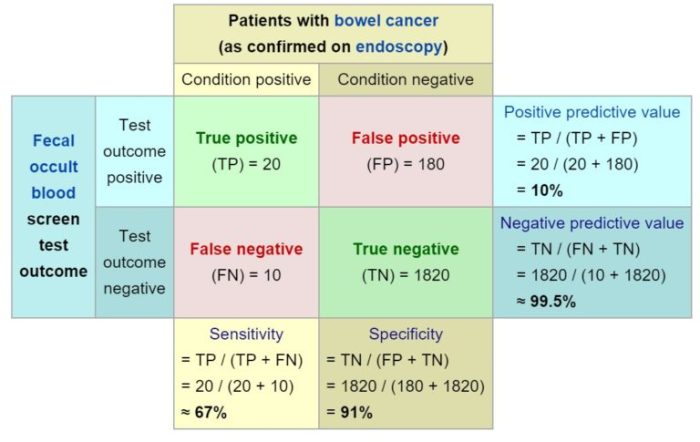

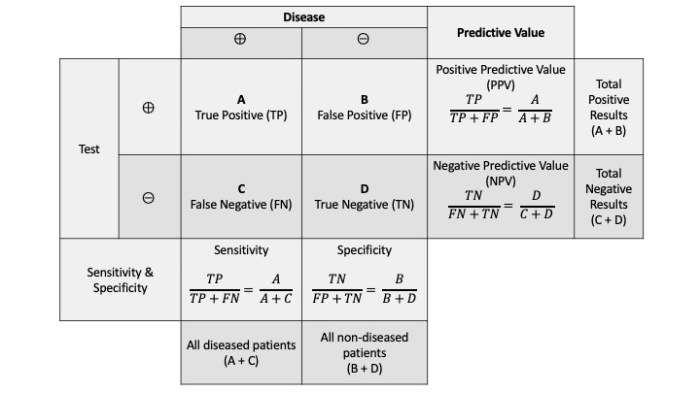

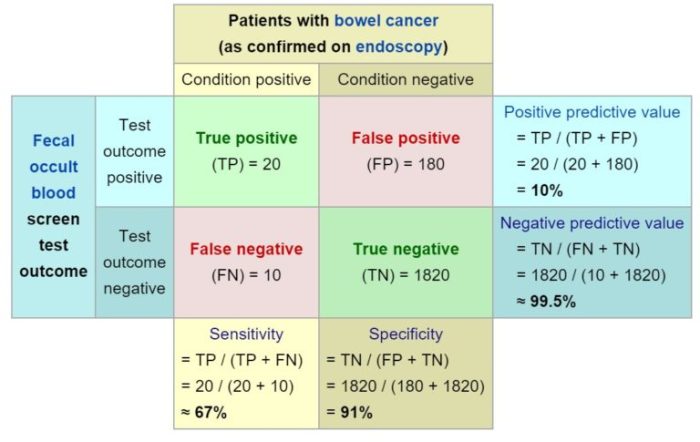

Sensitivity is the proportion of individuals with a disease who test positive for that disease. It represents the test’s ability to correctly identify individuals with the condition. Mathematically, sensitivity is calculated as:

Sensitivity = (True Positives) / (True Positives + False Negatives)

This formula highlights the key components of sensitivity: true positives (people with the disease who correctly test positive) and false negatives (people with the disease who incorrectly test negative).
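The sensitivity formula can be sketched in a few lines of Python. This is only an illustration: the function name `sensitivity` and the study counts are made up for the example and do not come from any real test.

```python
def sensitivity(true_positives: int, false_negatives: int) -> float:
    """Proportion of people WITH the disease who test positive."""
    diseased = true_positives + false_negatives
    if diseased == 0:
        raise ValueError("sensitivity is undefined when nobody has the disease")
    return true_positives / diseased


# Hypothetical study: 200 people have the disease and 180 of them test positive,
# so the remaining 20 are false negatives.
example = sensitivity(true_positives=180, false_negatives=20)
print(f"Sensitivity: {example:.1%}")  # 180 / 200 = 90.0%
```

Note that people without the disease never enter the calculation, which is why sensitivity alone says nothing about false positives.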

Implications of High and Low Sensitivity Values

High sensitivity is desirable in screening tests for diseases with potentially serious consequences or when early detection is crucial. In such cases, minimizing false negatives is paramount. Conversely, low sensitivity might be acceptable in situations where the disease is less severe, or when the test is part of a diagnostic process involving multiple steps.

Examples of Medical Tests with High Sensitivity

Several medical tests demonstrate high sensitivity and are valuable tools in healthcare. For example, complete blood counts (CBCs) are highly sensitive to various blood disorders. The presence of specific markers, like elevated white blood cell counts, often indicates an infection, even in its early stages. Similarly, certain imaging techniques, like chest X-rays, show high sensitivity for detecting pneumonia.

This sensitivity allows for early intervention and appropriate treatment.

Influence of Disease Prevalence on Sensitivity

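The prevalence of a disease plays a role in the interpretation of sensitivity, but it does not change sensitivity itself. Sensitivity is calculated only among people who have the disease, so in principle it does not depend on how common the disease is. Prevalence matters in two other ways. First, for a rare disease a validation study will usually include fewer true cases, so the sensitivity estimate is less precise. Second, prevalence determines the predictive values, that is, how likely a positive or negative result is to be correct.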

Sensitivity vs. Specificity

Sensitivity is often contrasted with specificity. While sensitivity focuses on correctly identifying those with a disease, specificity emphasizes correctly identifying those without the disease. A high specificity means a low chance of a false positive result. Understanding both sensitivity and specificity is crucial for comprehensively evaluating a diagnostic test’s performance.

Impact of Test Design on Sensitivity

The design of a medical test significantly influences its sensitivity. For instance, a test that uses a more rigorous detection method or a wider range of markers is more likely to identify individuals with the disease. Likewise, the test’s cutoff values (the threshold for a positive result) also affect sensitivity.

Sensitivity of Different Tests for Pneumonia

| Test | Sensitivity (%) |
|---|---|
| Chest X-ray | 80-95 |
| CT scan | 90-98 |
| Blood tests (procalcitonin) | 70-85 |

Note: The sensitivity values are approximate ranges and may vary depending on the specific population and testing conditions.

Specificity

Specificity in medical testing measures how well a test correctly identifies individuals without the condition. A highly specific test minimizes false positives, which is crucial for avoiding unnecessary interventions and anxiety. Put simply, specificity is the proportion of individuals without a disease who test negative. It is a vital complement to sensitivity, as both are necessary for a comprehensive understanding of a diagnostic test’s performance.

Definition and Formula

Specificity is calculated as the number of true negatives divided by the total number of individuals without the disease. A higher specificity indicates a lower likelihood of a false positive result.

Specificity = True Negatives / (True Negatives + False Positives)
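As a rough illustration, the specificity formula translates directly into Python. The function name `specificity` and the counts below are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def specificity(true_negatives: int, false_positives: int) -> float:
    """Proportion of people WITHOUT the disease who test negative."""
    healthy = true_negatives + false_positives
    if healthy == 0:
        raise ValueError("specificity is undefined when everybody has the disease")
    return true_negatives / healthy


# Hypothetical study: 800 people do not have the disease and 760 of them
# test negative, so the remaining 40 are false positives.
example = specificity(true_negatives=760, false_positives=40)
print(f"Specificity: {example:.1%}")  # 760 / 800 = 95.0%
```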

Implications of High and Low Specificity

High specificity is desirable in medical testing, reducing the risk of misdiagnoses and unnecessary treatments. A high specificity test is more likely to accurately identify individuals without the disease. Conversely, low specificity increases the chance of false positives, potentially leading to unnecessary interventions, anxiety, and wasted resources.

Examples of Tests with High Specificity

Many tests exhibit high specificity, playing crucial roles in diagnosis and management. For instance, blood tests for certain infections, such as those detecting antibodies to HIV, often have high specificity. These tests can effectively identify those without the infection, reducing the chance of misdiagnosis. Genetic tests for specific genetic disorders also often demonstrate high specificity, accurately identifying those who do not carry the genetic mutation.

Influence of Prevalence on Specificity

The prevalence of a disease influences how specificity plays out in practice. Like sensitivity, specificity is calculated within one group (here, people without the disease), so in principle it does not depend on prevalence. What prevalence changes is how a positive result should be read. When a disease is rare, people without it vastly outnumber those with it, so even a highly specific test can produce more false positives than true positives, and the test can appear less accurate than its specificity suggests.

This phenomenon does not imply a change in the test’s inherent ability to differentiate between those with and without the disease, but rather a reflection of the population being tested.

Comparison with Sensitivity

Specificity and sensitivity are distinct yet complementary concepts. Sensitivity focuses on correctly identifying individuals with the disease, while specificity focuses on correctly identifying those without it. Both are essential for evaluating the overall performance of a medical test. Consider a test for a rare cancer: high sensitivity might be crucial to detect early cases, but high specificity is essential to avoid unnecessary treatments and anxieties in those without the cancer.

Impact of Test Design on Specificity

Test design plays a significant role in determining specificity. Well-designed tests utilize precise methodology and standardized procedures to minimize false positives. For example, in laboratory tests, meticulous control of reagents and equipment calibration are crucial for high specificity. Improved test design can lead to reduced variability and enhanced accuracy, thus minimizing false positives.

Specificity of Different Tests for COVID-19

| Test Type | Specificity (Estimated) | Description |
|---|---|---|
| PCR | 95-99% | Highly sensitive and specific for detecting viral genetic material; can be impacted by viral load. |
| Rapid Antigen Test | 80-95% | Faster than PCR, with lower estimated specificity here; it can also miss some infections, which is a sensitivity limitation. |
| Antibody Test | 90-98% | Identifies prior exposure; not as accurate for active infection. |

Note: Specificity values can vary depending on the specific test, methodology, and population tested.

Factors Affecting Sensitivity and Specificity

Understanding the sensitivity and specificity of medical tests is crucial for accurate diagnosis and treatment. These metrics, while important, are not static. Several factors influence their values, impacting how reliable a test is in different clinical scenarios. These factors need careful consideration when interpreting test results and determining their usefulness in specific patient populations.

Disease Prevalence

Disease prevalence significantly affects how test results should be interpreted. A higher prevalence means a larger proportion of the tested population has the condition. Sensitivity and specificity are each calculated within a single group (people with the disease and people without it, respectively), so in principle they do not change with prevalence. What changes is the meaning of an individual result. For example, a test with high sensitivity and specificity can still produce more false positives than true positives when it is used in a population where the disease is rare, simply because people without the disease vastly outnumber those with it.

This is why the positive predictive value (PPV) and negative predictive value (NPV) of a test depend on prevalence: PPV falls and NPV rises as the disease becomes rarer. In practice, measured sensitivity and specificity can also differ between populations when the mix of disease severity differs, an effect often called spectrum bias.
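To make the link between prevalence and predictive values concrete, here is a minimal sketch that derives PPV and NPV from sensitivity, specificity, and prevalence. The function name `predictive_values`, the 95% figures, and the prevalence levels are all assumptions picked for illustration.

```python
def predictive_values(sens: float, spec: float, prevalence: float) -> tuple[float, float]:
    """Return (PPV, NPV) for a test used at a given disease prevalence."""
    if not 0 < prevalence < 1:
        raise ValueError("prevalence must be strictly between 0 and 1")
    # Expected fraction of the whole population in each cell of the 2x2 table.
    tp = sens * prevalence
    fn = (1 - sens) * prevalence
    tn = spec * (1 - prevalence)
    fp = (1 - spec) * (1 - prevalence)
    ppv = tp / (tp + fp) if (tp + fp) > 0 else float("nan")
    npv = tn / (tn + fn) if (tn + fn) > 0 else float("nan")
    return ppv, npv


# Hypothetical test with 95% sensitivity and 95% specificity,
# applied to populations where the disease is rare or common.
for prevalence in (0.001, 0.01, 0.10, 0.50):
    ppv, npv = predictive_values(0.95, 0.95, prevalence)
    print(f"prevalence {prevalence:>6.1%}: PPV {ppv:.1%}, NPV {npv:.2%}")
```

With these assumed numbers, the same test gives a PPV of roughly 16% at 1% prevalence and 95% at 50% prevalence, even though its sensitivity and specificity never change.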

Test Cut-off Points

Test cut-off points are critical in determining sensitivity and specificity. These points define the threshold at which a test result is considered positive or negative. Adjusting the cut-off point can alter the balance between sensitivity and specificity. A lower cut-off point increases the chance of identifying individuals with the condition (higher sensitivity) but may also increase false positives (lower specificity).

Conversely, a higher cut-off point decreases false positives (higher specificity) but may also miss some individuals with the condition (lower sensitivity). The optimal cut-off point depends on the specific clinical context and the desired balance between these two metrics.
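The effect of moving the cut-off can be sketched with a small, made-up data set. The example assumes that higher biomarker readings point toward disease and that a reading at or above the cut-off counts as positive; the function name and all values are hypothetical.

```python
def sens_spec_at_cutoff(diseased_values, healthy_values, cutoff):
    """Treat a reading >= cutoff as a positive result; return (sensitivity, specificity)."""
    true_positives = sum(v >= cutoff for v in diseased_values)
    true_negatives = sum(v < cutoff for v in healthy_values)
    return true_positives / len(diseased_values), true_negatives / len(healthy_values)


# Made-up biomarker readings for people with and without the condition.
diseased = [5.8, 6.4, 6.9, 7.1, 7.5, 8.0, 8.6, 9.2]
healthy = [4.1, 4.6, 5.0, 5.3, 5.9, 6.1, 6.6, 7.0]

for cutoff in (5.5, 6.5, 7.5):
    sens, spec = sens_spec_at_cutoff(diseased, healthy, cutoff)
    print(f"cutoff {cutoff}: sensitivity {sens:.0%}, specificity {spec:.0%}")
# cutoff 5.5: sensitivity 100%, specificity 50%
# cutoff 6.5: sensitivity 75%, specificity 75%
# cutoff 7.5: sensitivity 50%, specificity 100%
```

Because the two groups overlap, no cut-off in this toy data reaches 100% on both measures at once.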

Disease Severity

Disease severity can influence test performance. In some cases, the severity of the disease may affect the ability of the test to detect the condition. For instance, in early-stage diseases, the test might not show significant changes, leading to lower sensitivity. In advanced stages, the test may produce more pronounced results, but the clinical picture might already be evident, making the test less crucial.

The sensitivity and specificity might also be affected by the stage of disease progression.

Test Cost and Trade-offs

The cost of a medical test is often intertwined with its sensitivity and specificity. More sophisticated and accurate tests often come with higher costs. This creates a trade-off between the desired level of accuracy and the financial implications for patients and healthcare systems. Clinicians must consider the cost-effectiveness of a test in relation to its diagnostic yield and the overall clinical picture.

This involves weighing the potential benefits of increased accuracy against the financial burden and potential for over-testing.

Factors Influencing Sensitivity and Specificity

| Factor | Impact on Sensitivity | Impact on Specificity |
|---|---|---|
| Disease Prevalence | Unchanged in principle; prevalence affects PPV and NPV | Unchanged in principle; prevalence affects PPV and NPV |
| Test Cut-off Points | Lower cut-off increases sensitivity, but increases false positives | Higher cut-off increases specificity, but decreases sensitivity |
| Disease Severity | Early stages may yield lower sensitivity; advanced stages may yield higher sensitivity, when the test may matter less clinically | May vary with the stage of disease progression |
| Test Cost | Higher cost tests may have higher sensitivity | Higher cost tests may have higher specificity |

Applications and Examples

Understanding sensitivity and specificity is crucial for effectively utilizing medical tests in clinical practice. These metrics, when properly interpreted, can significantly aid in diagnosing diseases, guiding treatment strategies, and ultimately improving patient outcomes. Their application extends across various medical specialties, from infectious disease to oncology.

Examples of Medical Tests in Different Clinical Settings

Medical tests vary greatly depending on the specific condition being investigated. Some common examples include blood tests, imaging techniques (like X-rays and CT scans), and genetic tests. A blood test for glucose levels is a simple, widely used screening tool for diabetes. Imaging techniques like X-rays are crucial for detecting fractures or pneumonia. Genetic tests can identify predispositions to certain diseases, like cancer.

The choice of test depends on the suspected diagnosis, available resources, and the patient’s specific needs.

Practical Applications in Diagnosing Diseases

Sensitivity and specificity play a vital role in disease diagnosis. A test with high sensitivity correctly identifies individuals with the disease, minimizing the risk of false negatives. Conversely, high specificity ensures that the test correctly identifies those without the disease, minimizing false positives. For example, a test for HIV with high sensitivity would accurately identify most individuals infected, preventing delayed treatment.

High specificity would ensure that those without HIV are not mistakenly labeled as positive, avoiding unnecessary anxiety and potential treatment complications.

Guiding Treatment Decisions

Test results, informed by sensitivity and specificity, can significantly influence treatment decisions. For instance, a test for prostate cancer with high specificity can help avoid unnecessary biopsies in patients who don’t have the disease. Conversely, a test with high sensitivity can help ensure that individuals with the disease receive timely intervention. The balance between sensitivity and specificity is critical in making the best treatment decisions, as over-reliance on one metric can lead to significant errors.

Importance of Considering Both Metrics

Interpreting test results requires considering both sensitivity and specificity. A test with high sensitivity but low specificity might yield many false positives, while a test with high specificity but low sensitivity might miss cases of the disease. This necessitates a careful evaluation of the clinical context and the potential consequences of both types of errors.

Prioritizing High Sensitivity Over High Specificity

In certain situations, prioritizing high sensitivity over high specificity is warranted. For example, in screening for a disease with serious consequences, such as cancer, it is often preferable to identify as many cases as possible, even if it means more false positives. Early detection can significantly improve outcomes. A highly sensitive screening test might identify individuals who would otherwise be diagnosed later, when treatment options might be limited.

Real-World Case Studies

A case study involving a rapid diagnostic test for influenza could illustrate the importance of both sensitivity and specificity. High sensitivity would be crucial to quickly identify individuals with influenza and implement preventive measures. High specificity would be equally important to avoid unnecessary quarantines and treatment in those who do not have the disease. The combination of high sensitivity and specificity would help in efficiently managing the outbreak.

Table of Medical Tests and Their Metrics

| Medical Test | Sensitivity (%) | Specificity (%) | Clinical Application |
|---|---|---|---|
| Blood Glucose Test | 95 | 98 | Diabetes screening |
| Pap Smear | 80 | 90 | Cervical cancer screening |
| Chest X-Ray | 90 | 95 | Pneumonia diagnosis |

Receiver Operating Characteristic (ROC) Curves

ROC curves are powerful tools in medical diagnostics. They provide a visual representation of the performance of a diagnostic test across different possible cut-off points. This allows clinicians and researchers to evaluate the trade-off between sensitivity and specificity and choose the optimal cut-off for a given situation. Understanding ROC curves is crucial for interpreting diagnostic test results effectively. By plotting sensitivity against 1-specificity, we gain a comprehensive picture of how the test performs at different thresholds.

This is particularly helpful when a single cut-off point isn’t ideal for all patients or populations.

Interpreting ROC Curves

ROC curves plot sensitivity (true positive rate) on the y-axis against 1-specificity (false positive rate) on the x-axis. Each point on the curve represents a different cut-off point for the diagnostic test. A perfect test would have a curve that passes through the top-left corner of the graph, where some cut-off achieves 100% sensitivity and 100% specificity at the same time. Real-world tests typically produce a curve that lies between the diagonal and the top-left corner, showing the trade-off between correctly identifying positives and avoiding false positives.
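The points of an ROC curve can be computed by hand, as the following sketch shows. It assumes that higher scores suggest disease and that labels are coded 1 for "has the condition" and 0 for "does not"; the function name `roc_points` and the scores are invented for the example.

```python
def roc_points(scores, labels):
    """Return (false_positive_rate, sensitivity) pairs, one per candidate cut-off.

    A score >= cut-off counts as a positive result.
    """
    positives = sum(labels)
    negatives = len(labels) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("need at least one person with and one without the condition")
    points = [(0.0, 0.0)]  # cut-off above every score: nobody is called positive
    for cutoff in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= cutoff and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= cutoff and y == 0)
        points.append((fp / negatives, tp / positives))
    return points


# Made-up test scores; label 1 = condition present, 0 = condition absent.
scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4, 0.3, 0.2]
labels = [1, 1, 0, 1, 1, 0, 0, 0]

for fpr, tpr in roc_points(scores, labels):
    print(f"1-specificity {fpr:.2f}, sensitivity {tpr:.2f}")
```

Plotting these pairs with any charting tool gives the staircase-shaped curve typical of small samples, running from (0, 0) to (1, 1).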

Choosing an Optimal Cut-off Point

The optimal cut-off point on an ROC curve is the one that maximizes the balance between sensitivity and specificity, depending on the specific clinical context. A higher cut-off will generally increase specificity, but may decrease sensitivity, while a lower cut-off will increase sensitivity but potentially increase false positives. Consider the clinical implications of both outcomes when choosing the best cut-off.

For example, a test for a serious condition might prioritize sensitivity to catch all cases, even at the cost of more false positives, which can be managed by additional tests. Conversely, a test for a less serious condition may prioritize specificity to avoid unnecessary interventions, potentially sacrificing some sensitivity.
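One common way to pick a "balanced" cut-off is Youden's J statistic (sensitivity + specificity - 1), which weights both kinds of error equally. That equal weighting is an assumption rather than a clinical recommendation; a serious condition may justify a different rule. The sketch below uses made-up readings, and the function name is hypothetical.

```python
def best_cutoff_by_youden(diseased_values, healthy_values, candidate_cutoffs):
    """Return (cutoff, J, sensitivity, specificity) for the cut-off with the highest J.

    A reading >= cutoff counts as positive. Ties keep the first cut-off tried.
    """
    best = None
    for cutoff in candidate_cutoffs:
        sens = sum(v >= cutoff for v in diseased_values) / len(diseased_values)
        spec = sum(v < cutoff for v in healthy_values) / len(healthy_values)
        j = sens + spec - 1  # Youden's J: 0 = useless, 1 = perfect
        if best is None or j > best[1]:
            best = (cutoff, j, sens, spec)
    return best


# Made-up biomarker readings; higher values are assumed to indicate disease.
diseased = [6.7, 6.9, 7.1, 7.5, 8.0, 8.6, 9.2, 9.9]
healthy = [4.1, 4.6, 5.0, 5.3, 5.9, 6.1, 6.6, 7.0]

cutoff, j, sens, spec = best_cutoff_by_youden(diseased, healthy, (5.5, 6.0, 6.5, 7.0, 7.5))
print(f"best cutoff {cutoff}: J={j:.2f}, sensitivity {sens:.0%}, specificity {spec:.0%}")
# best cutoff 6.5: J=0.75, sensitivity 100%, specificity 75%
```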

Area Under the Curve (AUC)

The area under the ROC curve (AUC) is a single numerical summary of the overall performance of a diagnostic test. AUC values can range from 0 to 1.0, but useful tests fall between 0.5 and 1.0. An AUC of 0.5 indicates a test that performs no better than random chance, while an AUC of 1.0 indicates a perfect test; a value below 0.5 means the test does worse than chance, which usually signals that its positive and negative labels are reversed. A higher AUC suggests a better test, meaning the test is more likely to correctly distinguish between those with and without the condition.

An AUC above 0.9 is generally considered excellent.
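The AUC has a convenient interpretation: it equals the probability that a randomly chosen person with the condition scores higher than a randomly chosen person without it, with ties counted as half. The sketch below computes it that way by comparing every pair; the function name `auc_by_pairs` and the scores are illustrative assumptions, and higher scores are assumed to indicate disease.

```python
def auc_by_pairs(diseased_scores, healthy_scores):
    """AUC as the share of (diseased, healthy) pairs ranked correctly; ties count 0.5."""
    if not diseased_scores or not healthy_scores:
        raise ValueError("both groups must contain at least one score")
    wins = 0.0
    for d in diseased_scores:
        for h in healthy_scores:
            if d > h:
                wins += 1.0
            elif d == h:
                wins += 0.5
    return wins / (len(diseased_scores) * len(healthy_scores))


# Made-up test scores for each group.
diseased_scores = [0.9, 0.8, 0.6, 0.55]
healthy_scores = [0.7, 0.4, 0.3, 0.2]

auc = auc_by_pairs(diseased_scores, healthy_scores)
print(f"AUC: {auc:.3f}")  # 14 of 16 pairs are ranked correctly -> 0.875
```

The pairwise approach is easy to read but slow for large data sets, where a rank-based or library implementation would be the practical choice.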

Visual Representation of a Typical ROC Curve

Imagine a graph with sensitivity on the y-axis and (1-specificity) on the x-axis. A curve connecting various points on this graph represents the ROC curve. Each point on the curve corresponds to a specific threshold for classifying a result as positive or negative. A point near the top-left corner signifies high sensitivity and specificity at that threshold. A diagonal line from the bottom-left to the top-right represents a test with no discriminatory power.

A curve closer to the top-left corner indicates a better diagnostic test.

Test Characteristics Related to ROC Curves

| Characteristic | Description |
|---|---|
| Sensitivity | Proportion of individuals with the condition who are correctly identified as positive. |
| Specificity | Proportion of individuals without the condition who are correctly identified as negative. |
| False Positive Rate (FPR) | Proportion of individuals without the condition who are incorrectly identified as positive. (1 - Specificity) |
| False Negative Rate (FNR) | Proportion of individuals with the condition who are incorrectly identified as negative. (1 - Sensitivity) |
| Cut-off Point | The threshold value used to classify a result as positive or negative. |
| Area Under the Curve (AUC) | Overall measure of test performance, ranging from 0.5 (chance) to 1.0 (perfect) for useful tests. |

Limitations and Challenges

Medical testing, while crucial for diagnosis and treatment, faces inherent limitations. Sensitivity and specificity, though valuable metrics, are not absolute guarantees. Understanding their limitations is essential for responsible interpretation and application. Factors like the complexity of diseases, the variability of patients, and the inherent limitations of the tests themselves influence the accuracy of results. This section delves into these challenges, highlighting potential biases and the importance of considering diverse populations when evaluating these metrics.

Limitations of Sensitivity and Specificity

Sensitivity and specificity, while fundamental to evaluating a medical test, are not perfect measures. They represent probabilities, not absolute certainties. A test with high sensitivity might miss some cases of a disease, while one with high specificity might falsely identify healthy individuals as diseased. This inherent probabilistic nature limits their usefulness in absolute terms. For example, a test with 95% sensitivity may still fail to detect the disease in 5% of cases, leading to a missed diagnosis.

Similarly, a test with 98% specificity might misclassify 2% of healthy individuals as diseased, leading to unnecessary interventions or anxieties.
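These percentages are easier to appreciate as head counts. The sketch below applies the 95% sensitivity and 98% specificity figures from the text to a hypothetical group of 10,000 people; the group size, the 1% prevalence, and the function name are assumptions made only for illustration.

```python
def expected_errors(population: int, prevalence: float, sens: float, spec: float):
    """Return the expected number of (missed cases, false alarms) in a tested group."""
    diseased = population * prevalence
    healthy = population - diseased
    missed_cases = diseased * (1 - sens)   # false negatives
    false_alarms = healthy * (1 - spec)    # false positives
    return missed_cases, false_alarms


# Assumed scenario: 10,000 people tested, 1% of whom have the disease.
missed, alarms = expected_errors(10_000, prevalence=0.01, sens=0.95, spec=0.98)
print(f"Expected missed cases: {missed:.0f}")   # 5 of the 100 people with the disease
print(f"Expected false alarms: {alarms:.0f}")   # 198 of the 9,900 people without it
```

Under these assumptions the false alarms far outnumber the missed cases, even though the specificity figure is the higher of the two.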

Challenges in Accurately Determining Metrics

Determining precise sensitivity and specificity values presents challenges. Obtaining a large, representative sample of individuals with and without the disease is crucial. This can be difficult, especially for rare diseases, where the number of affected individuals is limited. Moreover, the definition of the disease itself can be ambiguous, potentially impacting the accuracy of the results. Variability in the disease presentation and the heterogeneity of the population being tested can further complicate the process.
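The effect of sample size on precision can be illustrated with a confidence interval around an estimated sensitivity. The sketch below uses the Wilson score interval, one of several reasonable methods and not one the text prescribes; the function name, the z value of 1.96 (roughly a 95% interval), and the study sizes are assumptions.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96):
    """Wilson score interval for a proportion such as sensitivity or specificity."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width


# Two hypothetical studies that both observe 95% sensitivity:
# a rare-disease study with 20 cases and a larger study with 200 cases.
for detected, cases in ((19, 20), (190, 200)):
    low, high = wilson_interval(detected, cases)
    print(f"{detected}/{cases} detected: interval {low:.1%} to {high:.1%}")
```

Both studies report the same point estimate, but the interval from the 20-case study is several times wider, which is the practical cost of validating a test on a rare disease.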

Potential for Bias in Test Development and Interpretation

Bias in test development and interpretation can significantly influence the reported sensitivity and specificity. For example, if the test development process disproportionately focuses on a specific demographic group, the results may not be generalizable to other populations. Additionally, subjective interpretations of test results, especially in borderline cases, can introduce bias. Researchers and clinicians must be mindful of these potential biases to ensure the validity and reliability of the data.

For instance, if a test is developed and validated predominantly on a group with a certain genetic predisposition, it might not perform equally well in populations without that predisposition.

Role of Patient Variability in Influencing Test Results

Patient variability plays a critical role in influencing test results. Factors such as age, sex, overall health, and the presence of other medical conditions can affect the way a test performs. For example, a patient with a compromised immune system might exhibit different responses to a certain test compared to a healthy individual. The variability among patients makes it challenging to establish universally applicable sensitivity and specificity values.

This necessitates considering these factors during the testing process and interpretation of results.

Different Population Sensitivities and Specificities

Different populations may exhibit different sensitivities and specificities for the same test. Genetic variations, environmental factors, and cultural practices can influence how individuals respond to the test. For example, a test designed for a particular ethnicity might not be equally effective in another. Therefore, it is crucial to consider population-specific variations when interpreting results and using the test for diagnostic purposes.

Such variations necessitate careful consideration of ethnicity and other demographics in test development and validation.

Summary of Limitations and Challenges

| Category | Description |
|---|---|
| Test Limitations | Ambiguous disease definition, variability in disease presentation, inherent test limitations (e.g., false positives/negatives). |
| Sampling Challenges | Difficulty in obtaining representative samples, particularly for rare diseases. Heterogeneity of the population being tested. |
| Bias Potential | Bias in test development (e.g., focusing on specific demographics), subjective interpretation of results. |
| Patient Variability | Influence of age, sex, overall health, and co-morbidities on test results. |
| Population Variations | Genetic variations, environmental factors, and cultural practices affecting test performance across diverse populations. |

Future Directions

The field of medical testing is constantly evolving, driven by technological advancements and a growing need for more accurate and efficient diagnostic tools. This evolution promises to revolutionize how we detect, diagnose, and treat diseases, leading to better patient outcomes. Looking ahead, we can anticipate exciting developments in testing methodologies, with a strong focus on improving sensitivity and specificity, and integrating cutting-edge technologies. Emerging trends in medical testing are reshaping the landscape of diagnostics.

From innovative molecular techniques to sophisticated imaging technologies, the future holds significant potential for enhanced diagnostic capabilities. This progress is crucial in enabling earlier and more precise diagnoses, ultimately improving treatment strategies and patient care.

Emerging Trends in Medical Testing Methodologies

New technologies are constantly pushing the boundaries of what’s possible in medical testing. Microfluidic devices, for instance, are revolutionizing the way we perform diagnostics. These miniature lab-on-a-chip devices can perform multiple tests simultaneously on very small sample volumes, increasing efficiency and reducing costs. Furthermore, advancements in genomics and proteomics are leading to the development of highly sensitive tests for detecting biomarkers associated with various diseases.

These biomarkers can offer early disease detection, personalized treatment strategies, and a deeper understanding of disease mechanisms.

Potential for Improved Sensitivity and Specificity in Future Tests

“Increased sensitivity and specificity are key goals in medical testing, as they directly impact diagnostic accuracy and reduce false positive and false negative results.”

The development of highly sensitive and specific tests is a critical area of research. Advanced imaging techniques, such as PET scans and MRI, are continually being refined to improve their ability to detect subtle changes in tissue structure and function. Additionally, the use of advanced bioanalytical techniques like mass spectrometry and chromatography promises to enhance the detection of disease biomarkers, leading to more accurate diagnoses.

Consider the impact of more precise tests for cancer detection: earlier diagnoses and targeted therapies can significantly improve patient outcomes.

Use of Technology in Developing More Accurate Diagnostic Tools

Technological advancements are accelerating the development of more accurate diagnostic tools. For example, point-of-care testing devices are becoming increasingly sophisticated, allowing for rapid and convenient testing in various settings, including hospitals, clinics, and even at home. This accessibility is crucial for providing timely diagnoses, especially in remote areas or for patients with limited access to healthcare facilities. Moreover, the use of artificial intelligence (AI) is transforming diagnostic accuracy, by enabling the analysis of complex data sets from medical imaging and other sources.

Potential Applications of AI in Improving Diagnostic Accuracy

AI algorithms are being trained on vast datasets of medical images, patient records, and genomic information to identify patterns and anomalies that might be missed by human clinicians. This ability to detect subtle patterns in data could lead to earlier diagnoses and more accurate predictions of disease progression. For example, AI-powered diagnostic tools can assist in the analysis of medical images to identify subtle signs of disease, such as early cancerous lesions in mammograms.

Table Outlining Potential Future Advancements in Medical Testing

| Advancement | Description | Impact |
|---|---|---|
| Microfluidic Devices | Miniaturized lab-on-a-chip devices for rapid and multiplexed testing. | Increased efficiency, reduced costs, and smaller sample volumes. |
| Advanced Imaging Techniques | Refined PET and MRI scans with enhanced resolution and sensitivity. | Early detection of disease, more precise diagnosis, and improved treatment planning. |
| Bioanalytical Techniques | Mass spectrometry and chromatography for precise detection of biomarkers. | Enhanced detection of disease biomarkers, improved diagnosis accuracy, and personalized treatment. |
| Point-of-Care Testing | Portable and rapid diagnostic devices for convenient testing in various locations. | Improved accessibility to healthcare, particularly in remote areas. |
| AI-Powered Diagnostics | Algorithms trained on vast datasets for pattern recognition and disease prediction. | Improved diagnostic accuracy, earlier disease detection, and personalized treatment strategies. |

Final Thoughts

In conclusion, sensitivity and specificity are fundamental aspects of medical testing. Their importance lies in enabling accurate diagnoses and guiding effective treatments. Understanding the interplay between these metrics, along with factors influencing their values, is critical for reliable clinical practice. While limitations exist, ongoing advancements in medical testing technologies promise improvements in accuracy and efficiency. This overview offers a comprehensive understanding of these concepts and their practical applications in healthcare.